
We’ve long recounted the bad news on law enforcement’s use of facial recognition software – how it misidentifies people and labels them as criminals, particularly people of color. But there is good news on this subject for once: the Detroit Police Department has reached a settlement with a man falsely arrested on the basis of a bad match from facial recognition technology (FRT), and the agreement includes what many civil libertarians are hailing as a new national standard for police.

The list of injustices from FRT false positives has grown in recent years. We told the story of Randall Reid, a Black man in Georgia, arrested for the theft of luxury goods in Louisiana. Even though Reid had never been to Louisiana, he was held in jail for a week. We told the story of Porcha Woodruff, a Detroit woman eight months pregnant, who was arrested in her driveway while her children cried. Her purported crime was – get this – a recent carjacking. Woodruff had to be rushed to the hospital after suffering contractions in her holding cell.

Detroit had a particularly bad run of such misuses of facial recognition in criminal investigations. One of them was the arrest of Robert Williams in 2020 for the 2018 theft of five watches from a boutique store in which the thief was caught on a surveillance camera. Williams spent 30 hours in jail.

Backed by the American Civil Liberties Union, the ACLU of Michigan, and the University of Michigan Civil Rights Litigation Initiative, Williams sued the police for wrongful arrest. In an agreement blessed by a federal court in Michigan, Williams received a generous settlement from the Detroit police. Most important in this settlement agreement are the new rules Detroit has embraced. From now on:

Another series of reforms imposes discipline on the way in which lineups of suspects or their images unfold. When witnesses perform lineup identifications, they may not be told that FRT was used as an investigative lead. Witnesses must report how confident they are about any identification. Officers showing images to a witness must themselves not know who the real suspect is, so they don’t mislead the witness with subtle, non-verbal clues. And photos of suspects must be shown one at a time rather than all at once, a practice that can lead a witness to select the one image that merely has the closest resemblance to the suspect.

Perhaps most importantly, Detroit police officers will be trained on the proper uses of facial recognition and eyewitness identification.

“The pipeline of ‘get a picture, slap it in a lineup’ will end,” Phil Mayor, a lawyer for the ACLU of Michigan, told The New York Times. “This settlement moves the Detroit Police Department from being the best-documented misuser of facial recognition technology into a national leader in having guardrails in its use.”

PPSA applauds the Detroit Police Department and ACLU for crafting standards that deserve to be adopted by police departments across the United States.

George Orwell wrote that in a time of deceit, telling the truth is a revolutionary act.

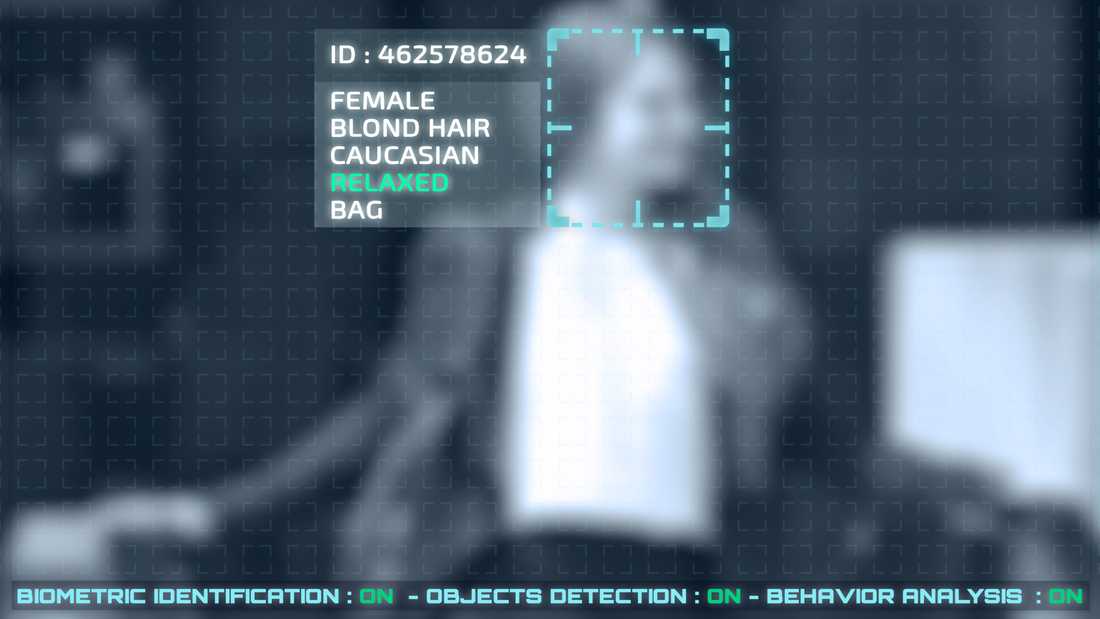

Revolutionary acts of truth-telling are becoming progressively more dangerous around the world. This is especially true as autocratic countries and weak democracies purchase AI software from China to weave together surveillance technology to comprehensively track individuals, following them as they meet acquaintances and share information.

A piece by Abi Olvera posted by the Bulletin of the Atomic Scientists describes this growing use of AI to surveil populations. Olvera reports that by 2019, 56 out of 176 countries were already using artificial intelligence to weave together surveillance data streams. These systems are increasingly being used to analyze the actions of crowds, track individuals across camera views, and pierce the use of masks or scramblers intended to disguise faces. The only impediment to effective use of this technology is the frequent Brazil-like incompetence of domestic intelligence agencies.

Olvera writes: “Among other things, frail non-democratic governments can use AI-enabled monitoring to detect and track individuals and deter civil disobedience before it begins, thereby bolstering their authority. These systems offer cash-strapped autocracies and weak democracies the deterrent power of a police or military patrol without needing to pay for, or manage, a patrol force …”

Olvera quotes AI surveillance expert Martin Beraja that AI can enable autocracies to “end up looking less violent because they have better technology for chilling unrest before it happens.”

Olivia Solon of Bloomberg reports on the uses of biometric identifiers in Africa, which are regarded by the United Nations and World Bank as a quick and easy way to establish identities where licenses, passports, and other ID cards are hard to come by. But in Uganda, Solon reports, President Yoweri Museveni – in power for 40 years – is using this system to track critics and political opponents of his rule. Used to catch criminals, biometrics is also being used to criminalize Ugandan dissidents and rival politicians for “misuse of social media” and sharing “malicious information.”

The United States needs to lead by example. As our facial recognition and other systems grow in ubiquity, Congress and the states need to demonstrate our ability to impose limits on public surveillance, and legal guardrails for the uses of the sensitive information they generate.

Suspect: “We Have to Follow the Law. Why Don’t They?”

Facial recognition software is useful but fallible. It often leads to wrongful arrests, especially given the software’s tendency to produce false positives for people of color.

We reported in 2023 on the case of Randall Reid, a Black man in Georgia, arrested and held for a week by police for allegedly stealing $10,000 of Chanel and Louis Vuitton handbags in Louisiana. Reid was traveling to a Thanksgiving dinner near Atlanta with his mother when he was arrested. He was three states and seven hours away from the scene of this crime, in a state in which he had never set foot.

Then there is the case of Porcha Woodruff, a 32-year-old Black woman, who was arrested in her driveway for a recent carjacking and robbery. She was eight months pregnant at the time, far from the profile of the carjacker. She suffered great emotional distress and suffered spasms and contractions while in jail.

Some jurisdictions have reacted to the spotty nature of facial recognition by requiring every purported “match” to be evaluated by a large team to reduce human bias. Other jurisdictions, from Boston to Austin and San Francisco, responded to the technology’s flaws by banning the use of this technology altogether.

The Washington Post’s Douglas MacMillan reports that officers of the Austin Police Department have developed a neat workaround for the ban. Austin police asked law enforcement in the nearby town of Leander to conduct face searches for them at least 13 times since Austin enacted its ban. Tyrell Johnson, a 20-year-old man who is a suspect in a robbery case due to a facial recognition workaround by Austin police, told MacMillan, “We have to follow the law. Why don’t they?”

Other jurisdictions are accused of working around bans by posting “be on the lookout” flyers in other jurisdictions, which critics say are meant to be picked up and run through facial recognition systems by other police departments or law enforcement agencies.

MacMillan’s interviews with defense lawyers, prosecutors, and judges revealed the core problem with the use of this technology – employing facial recognition to generate leads but not evidence. They told him that prosecutors are not required in most jurisdictions to inform criminal defendants they were identified using an algorithm. This highlights the larger problem with high-tech surveillance in all its forms: improperly accessed data, reviewed without a warrant, can allow investigators to work backwards to incriminate a suspect.

Many criminal defendants never discover the original “evidence” that led to their prosecution, and thus can never challenge the basis for their case. This “backdoor search loophole” is the greater risk, whether one is dealing with databases of mass internet communications or facial recognition. Thanks to this loophole, Americans can be accused of crimes but left in the dark about how the cases against them were started.

Wired reports that police in northern California asked Parabon NanoLabs to run a DNA sample from a cold case murder scene to identify the culprit. Police have often run DNA against the vast database of genealogical tests, cracking cold cases like the Golden State Killer, who murdered at least 13 people.

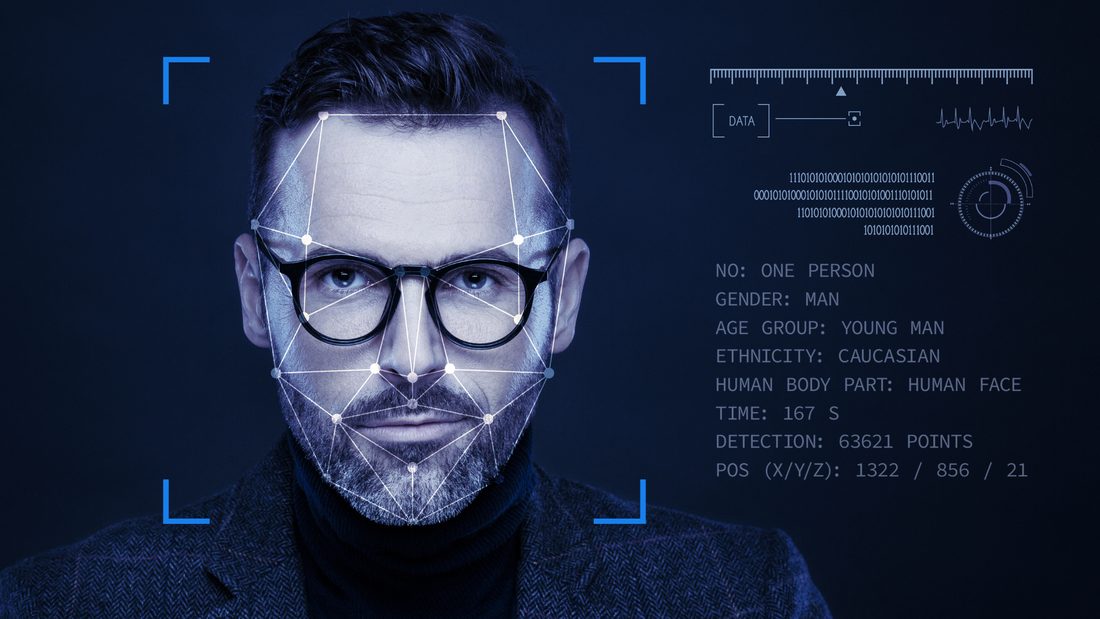

But what Parabon NanoLabs did for the police in this case was something entirely different. The company produced a 3D rendering of a “predicted face” based on the genetic instructions encoded in the sample’s DNA. The police then ran it against facial recognition software to look for a match.

Scientists are skeptical that this is an effective tool given that Parabon’s methods have not been peer-reviewed. Even the company’s director of bioinformatics, Ellen Greytak, told Wired that such face predictions are closer in accuracy to a witness description than to an exact replica of a face. With the DNA being merely suggestive – Greytak jokes that “my phenotyping can tell you if your suspect has blue eyes, but my genealogist can tell you the guy’s address” – the potential for false positives is enormous.

Police multiply that risk when they run a predicted face against the vast databases behind facial recognition technology (FRT), technology that itself is far from perfect. Despite cautionary language from technology producers and instructions from police departments, many detectives persist in mistakenly believing that FRT returns matches. Instead, it produces possible candidate matches arranged in the order of a “similarity score.” FRT is also better with some types of faces than others. It is up to 100 times more likely to misidentify Asian and Black people than white men.

The American Civil Liberties Union, in a thorough 35-page comment to the federal government on FRT, biometric technologies, and predictive algorithms, noted that defects in FRT are likely to multiply when police take a low-quality image and try to brighten it, or reduce pixelation, or otherwise enhance the image. We can only imagine the Frankenstein effect of mating a predicted face with FRT.

As PPSA previously reported, rights are violated when police take a facial match not as a clue, but as evidence. This is what happened when Porcha Woodruff, a 32-year-old Black woman and nursing student in Detroit, was arrested on her doorstep while her children cried. Eight months pregnant, she was told by police that she had committed recent carjackings and robberies – even though the woman committing the crimes in the images was not visibly pregnant. Woodruff went into contractions while still in jail. In another case, local police executed a warrant by arresting a Georgia man at his home for a crime committed in Louisiana, even though the arrestee had never set foot in Louisiana.

The only explanation for such arrests is sheer laziness, stupidity, or both on the part of the police. As the ACLU documents, facial recognition forms warn detectives that a match “should only be considered an investigative lead. Further investigation is needed to confirm a match through other investigative corroborated information and/or evidence. INVESTIGATIVE LEAD, NOT PROBABLE CAUSE TO MAKE AN ARREST.”

In the arrests made in Detroit and Georgia, police had not performed any of the rudimentary investigative steps that would have immediately revealed that the person they were investigating was innocent. Carjacking and violent robberies are not typically undertaken by women on the verge of giving birth.

The potential for replicating error in the courtroom would be multiplied by showing a predicted face to an eyewitness. If a witness is shown a predicted face, that could easily influence the witness’s memory when presented with a line-up.

We understand that an investigation might benefit from knowing that DNA reveals that a perp has blue eyes, allowing investigators to rule out all brown- and green-eyed suspects. But a predicted face should not be enough to search through a database of innocent people. In fact, any searches of facial recognition databases should require a warrant.

As technology continues to push the boundaries, states need to develop clear procedural guidelines and warrant requirements that protect constituents’ constitutional rights.

Woman, Eight Months Pregnant, Arrested for Carjacking and Robbery

PPSA has long followed the dysfunction of facial recognition technology and police overreliance on it to identify suspects. As we reported in January, three common facial recognition tools failed every time they were confronted with images of 16 pairs of people who resembled one another.

This technology is most apt to make mistakes with people of color and with women. Stories had piled up about Americans – overwhelmingly Black men – who have been mistakenly arrested, including one Georgia man arrested and held for a week for stealing handbags in Louisiana. That innocent man had never set foot in Louisiana.

Now we have the first woman falsely arrested for the crime of resembling a scofflaw. Porcha Woodruff, a 32-year-old Black woman and nursing student in Detroit, was arrested at her doorstep while her children cried. Woodruff, eight months pregnant, was told by police that she was being arrested for a recent carjacking and robbery.

“I was having contractions in the holding cell,” Woodruff told The New York Times’ Kashmir Hill. “My back was sending me sharp pains. I was having spasms.” After being released on bond, Woodruff had to go straight to the hospital.

The obvious danger of this technology is that it tends to misidentify people, a problem exacerbated by distinctly lazy investigations by police. We see a larger danger: as public and private cameras are increasingly networked, and law enforcement agencies can fully track our movements, this technology will mistakenly put some Americans at the scene of a crime. And if the technology improves and someday works flawlessly? We can be assured of being followed throughout our day – who we meet with, where we worship or engage in political activity or protest – with perfect accuracy.

In “A Scanner Darkly,” a 2006 film based on a Philip K. Dick novel, Keanu Reeves plays a government undercover agent who must wear a “scramble suit” – a cloak that constantly alters his appearance and voice to avoid having his cover blown by ubiquitous facial recognition surveillance.

At the time, the phrase “ubiquitous facial recognition surveillance” was still science fiction. Such surveillance now exists throughout much of the world, from Moscow, to London, to Beijing. Scramble suits do not yet exist, and sunglasses and masks won’t defeat facial recognition software (although “universal perturbation” masks sold on the internet purport to defeat facial tracking).

Now that companies like Clearview AI have reduced human faces to the equivalent of personal ID cards, the proliferation of cameras linked to robust facial recognition software has become a privacy nightmare. A year ago, PPSA reported on a technology industry presentation that showed how stationary cameras could follow a man, track his movements, locate people he knows, and compare all that to other data to map his social networks. Facial recognition doesn’t just show where you went and what you did: it can be a form of “social network analysis,” mapping networks of people associated by friendship, work, romance, politics, and ideology.

Nowhere is this capability more robust than in the People’s Republic of China, where the surveillance state has reached a level of sophistication worthy of the overused sobriquet “Orwellian.” A comprehensive net of data from a person’s devices, posts, searches, movements, and contacts tells the government of China all it needs to know about any one of 1.3 billion individuals.

That is why so many civil libertarians are alarmed by the responses to an ACLU Freedom of Information Act (FOIA) lawsuit.
The Washington Post reports that government documents released in response to that FOIA lawsuit show that “FBI and Defense Department officials worked with academic researchers to refine artificial-intelligence techniques that could help in the identification or tracking of Americans without their awareness or consent.”

The Intelligence Advanced Research Projects Activity, a research arm of the intelligence community, aimed in 2019 to increase the power of facial recognition, “scaling to support millions of subjects.” Included in this is the ability to identify faces from oblique angles, even from a half-mile away. The Washington Post reports that dozens of volunteers were monitored within simulated real-world scenarios – a subway station, a hospital, a school, and an outdoor market. The faces and identities of the volunteers were captured in thousands of surveillance videos and images, some of them captured by drone.

The result is an improved facial recognition search tool called Horus, which has since been offered to at least six federal agencies. An audit by the Government Accountability Office found in 2021 that 20 federal agencies, including the U.S. Post Office and the Fish and Wildlife Service, use some form of facial recognition technology.

In short, our government is aggressively researching facial recognition tools that are already used by the Russian and Chinese governments to conduct the mass surveillance of their peoples. Nathan Wessler, deputy director of the ACLU, said that the regular use of this form of mass surveillance in ordinary scenarios would be a “nightmare scenario” that “could give the government the ability to pervasively track as many people as they want for as long as they want.”

As we’ve said before, one does not have to infer a malevolent intention by the government to worry about its actions. Many agency officials are desperate to catch bad guys and keep us safe.
But they are nevertheless assembling, piece-by-piece, the elements of a comprehensive surveillance state.

Facial recognition technology has proven to be useful but fallible. It relies on probabilities, not certainties, with algorithms measuring the angle of a nose or the tilt of an eyebrow. It has a higher chance of misidentifying women and people of color. And in the hands of law enforcement, it can be a dangerous tool for mass surveillance and wrongful arrest.

It should come as no surprise, then, that police mistakenly arrested yet another man using facial recognition technology. Randall Reid, a Black man in Georgia, was recently arrested and held for a week by police for allegedly stealing $10,000 of Chanel and Louis Vuitton handbags in Louisiana. Reid was traveling to a Thanksgiving dinner with his mother when he was arrested three states and seven hours away from the scene of the crime. Despite Reid’s claim he’d never even been to Louisiana, facial recognition software identified Reid as a suspect in the theft of the luxury purses. That was all the police needed to hold him for close to a week in jail, according to The New Orleans Advocate. Gizmodo reports, “numerous studies show the technology is especially inaccurate when identifying people of color and women compared to identifications of white men. Some law enforcement officials regularly acknowledge this fact, saying facial recognition is only suitable to generate leads and should never be used as the sole basis for arrest warrants. But there are very few rules governing the technology. Cops often ignore that advice and take face recognition at face value.” When scientists tested three facial recognition tools with 16 pairs of doppelgangers – people with extraordinary resemblances – the computers found all of them to be a match. In the case of Reid, however, he was 40 pounds lighter than the criminal caught on camera. In Metairie, the New Orleans suburb where Reid was accused of theft, law enforcement officials can use facial recognition without legal restriction. In most cases, “prosecutors don’t even have to disclose that facial recognition was involved in investigations when suspects make it to court.” Elsewhere in Louisiana, there is no regulation. A state bill to restrict use of facial recognition died in 2021 in committee. Some localities use facial recognition just to generate leads. 
Others take it and run with it, using it more aggressively to pursue supposed criminals.

As facial recognition technology proliferates, from Ring cameras to urban CCTVs, states must put guardrails around the use of this technology. If facial recognition tech is to be used, it must be one tool for investigators, not a sole cause for arrest and prosecution. Police should use other leads and facts to generate probable cause for arrest. And legal defense must always be notified when facial recognition technology was used to generate a case.

It may be decades before the technical flaws in facial recognition are resolved. Even then, we should ensure that the technology is closely governed and monitored.

Facial recognition software is a problem when it doesn’t work. It can conflate the innocent with the guilty if the two have only a passing resemblance. In one test, it identified 27 Members of Congress as arrested criminals. It is also apt to work less well on people of color, leading to false arrests.

But facial recognition is also a problem when it does work. One company, Vintra, has software that follows a person camera by camera to track any person he or she may interact with along the way. Another company, Clearview AI, identifies a person and creates an instant digital dossier on him or her with data scraped from social media platforms.

Thus, facial recognition software does more than locate and identify a person. It has the power to map relationships and networks that could be personal, religious, activist, or political. Major Neill Franklin (Ret.), Maryland State Police and Baltimore Police Department, writes that facial recognition software has been used to violate “the constitutionally protected rights of citizens during lawful protest.”

False arrests and crackdowns on dissenters and protestors are bound to result when such robust technology is employed by state and local law enforcement agencies with no oversight or governing law. The spread of this technology takes us inch by inch closer to the kind of surveillance state perfected by the People’s Republic of China.

It is for all these reasons that PPSA is heartened to see Rep. Ted Lieu join with Reps. Sheila Jackson Lee, Yvette Clarke and Jimmy Gomez on Thursday to introduce the Facial Recognition Act of 2022. This bill would place strong limits and prohibitions on the use of facial recognition technology (FRT) in law enforcement. Some of the provisions of this bill would:

The introduction of this bill is the result of more than a year of hard work and fine tuning by Rep. Lieu. This bill deserves widespread recognition and bipartisan support.
